Final ID: MP2129

A Novel Framework for End-Diastolic and End-Systolic Frame Localization in Contrast and Non-Contrast Echocardiography Without Manual Annotations

Background: End-diastolic (ED) and end-systolic (ES) frames are critical for left ventricular (LV) volume measurements in echocardiography, but their manual identification is subject to high inter- and intra-observer variability. Deep learning (DL) methods have emerged for ED/ES detection; however, they typically rely on manually annotated reference frames and often fail to generalize across image types, such as contrast and non-contrast echocardiographic views.

Methods: A novel, fully automated framework was developed to localize ED/ES frames in both contrast and non-contrast cine loops without manual ED/ES frame annotations. The pipeline begins with a YOLOv12 object detection model that identifies the LV as a region of interest (ROI); alternatively, a fixed bounding box can be used or the localization step omitted. The largest detected ROI is used to crop the cine loop. Robust principal component analysis (RPCA) then decomposes the cine into low-rank and sparse components, and singular value decomposition (SVD) of the low-rank matrix yields the top three left singular vectors (U). The Spectral Dominance Ratio is used to identify pseudo-periodic cardiac cycles in each singular vector. Zero-crossings and their variance are then computed, and the singular vector with the lowest variance (and at least two cardiac cycles) is selected to represent the cardiac cycle. Finally, a peak detection algorithm identifies the local extrema corresponding to the ED and ES frames.
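
A minimal Python/NumPy sketch of the cropping, RPCA, and SVD steps is given below. It assumes the cine is a `(frames, height, width)` array and that detector output is a list of `(x1, y1, x2, y2)` pixel boxes (a stand-in for YOLOv12 results, not its API). For RPCA it uses the standard inexact augmented Lagrange multiplier solver for principal component pursuit, which is only one possible choice; the authors' solver and parameters are not stated, and all function names here are hypothetical.

```python
import numpy as np


def crop_to_largest_box(cine, boxes):
    """Crop a (frames, H, W) cine to the largest (x1, y1, x2, y2) box.

    `boxes` is a hypothetical stand-in for detector output; with no boxes the
    full field of view is kept, mirroring the "no localization" option.
    """
    if not boxes:
        return cine
    x1, y1, x2, y2 = max(boxes, key=lambda b: (b[2] - b[0]) * (b[3] - b[1]))
    return cine[:, y1:y2, x1:x2]


def shrink(x, tau):
    """Element-wise soft thresholding (proximal operator of the L1 norm)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def svd_shrink(x, tau):
    """Singular value thresholding (proximal operator of the nuclear norm)."""
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    return (u * shrink(s, tau)) @ vt


def rpca(m, max_iter=500, tol=1e-7):
    """Principal component pursuit M = L + S via inexact augmented Lagrangian."""
    lam = 1.0 / np.sqrt(max(m.shape))   # usual default sparsity weight
    norm_m = np.linalg.norm(m)          # Frobenius norm, for the stopping rule
    mu = 1.25 / np.linalg.norm(m, 2)    # spectral norm sets the initial step
    mu_max = mu * 1e7
    y = np.zeros_like(m)
    s = np.zeros_like(m)
    low = np.zeros_like(m)
    for _ in range(max_iter):
        low = svd_shrink(m - s + y / mu, 1.0 / mu)
        s = shrink(m - low + y / mu, lam / mu)
        residual = m - low - s
        y = y + mu * residual
        mu = min(mu * 1.5, mu_max)
        if np.linalg.norm(residual) <= tol * norm_m:
            break
    return low, s


def temporal_components(cine, k=3):
    """Top-k left singular vectors of the low-rank part (rows are frames)."""
    m = cine.reshape(cine.shape[0], -1).astype(float)
    low, _ = rpca(m)
    u, _, _ = np.linalg.svd(low, full_matrices=False)
    return u[:, :k]
```

With frames as rows, each left singular vector is a per-frame time series, which is what makes the cardiac cycle visible in U. One component can mostly track the static background (a near-constant signal), which is one reason to examine more than a single vector.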

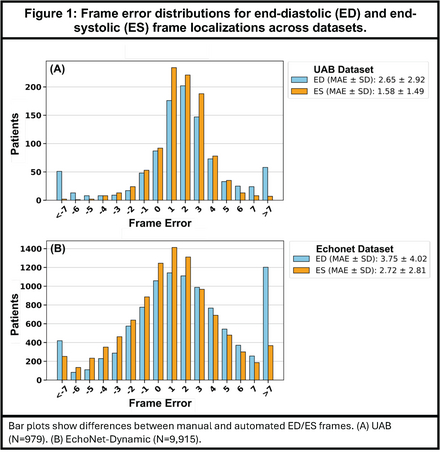
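
The cycle-selection and extrema-detection steps from the Methods can be sketched as follows. The abstract does not define the Spectral Dominance Ratio, so `spectral_dominance_ratio` below is an assumed definition (share of non-DC power in the strongest frequency bin and its two neighbours). The mean-centring, the thresholds `min_sdr` and `min_distance`, the use of the variance of inter-crossing intervals, and the mapping of maxima to ED and minima to ES (which first requires resolving the arbitrary sign of a singular vector) are likewise assumptions. All names are hypothetical.

```python
import numpy as np


def spectral_dominance_ratio(x):
    """ASSUMED SDR: fraction of non-DC power in the dominant bin +/- 1 bin."""
    power = np.abs(np.fft.rfft(x - x.mean())) ** 2
    power[0] = 0.0
    k = int(np.argmax(power))
    total = power.sum()
    return power[max(k - 1, 0):k + 2].sum() / total if total > 0 else 0.0


def zero_crossings(x):
    """Indices i where the mean-centred signal changes sign between i and i+1."""
    c = x - x.mean()
    return np.nonzero(np.signbit(c[:-1]) != np.signbit(c[1:]))[0]


def select_cardiac_vector(u, min_sdr=0.5, min_cycles=2):
    """Column of u with the most regular zero-crossing spacing, or None.

    A column qualifies only if it looks pseudo-periodic (assumed SDR
    threshold) and spans at least `min_cycles` cycles (two crossings/cycle).
    """
    best, best_var = None, np.inf
    for j in range(u.shape[1]):
        x = u[:, j]
        if spectral_dominance_ratio(x) < min_sdr:
            continue
        zc = zero_crossings(x)
        if (len(zc) - 1) / 2 < min_cycles:
            continue
        var = np.var(np.diff(zc))  # variance of inter-crossing intervals
        if var < best_var:
            best, best_var = j, var
    return best


def local_maxima(x, min_distance):
    """Greedy peak picking: interior local maxima, strongest first,
    kept only if at least `min_distance` frames from every kept peak."""
    cand = [i for i in range(1, len(x) - 1) if x[i - 1] < x[i] >= x[i + 1]]
    kept = []
    for i in sorted(cand, key=lambda i: x[i], reverse=True):
        if all(abs(i - k) >= min_distance for k in kept):
            kept.append(i)
    return sorted(kept)


def ed_es_frames(x, min_distance):
    """ASSUMED mapping: maxima -> ED, minima -> ES (sign already resolved)."""
    return local_maxima(x, min_distance), local_maxima(-x, min_distance)
```

A case with no qualifying column (`select_cardiac_vector` returning `None`) corresponds to the exclusions reported in the Results. A library routine such as SciPy's `find_peaks` could replace the greedy picker.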

Results: The method was validated on a UAB dataset (N=984; 912 contrast, 72 non-contrast), with the publicly available EchoNet-Dynamic dataset (N=10,030; non-contrast) used for external validation. The YOLO model was trained exclusively on the UAB dataset (1394 images for training, 298 for validation, and 300 for testing). On the UAB test set, it achieved a mean Average Precision at an intersection-over-union (IoU) threshold of 0.50 (mAP50) of 0.994 and an mAP averaged over IoU thresholds from 0.50 to 0.95 (mAP50-95) of 0.717. Mean absolute errors (MAE) on the UAB dataset were 2.65 ± 2.95 frames (median 2) for ED and 1.58 ± 1.49 frames (median 1) for ES. On the EchoNet-Dynamic dataset, the MAE was 3.75 ± 4.02 frames (median 2) for ED and 2.72 ± 2.81 frames (median 2) for ES. Five UAB and 115 EchoNet-Dynamic cases were excluded because only one cardiac cycle was detected in U.
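
Reference ED/ES frames are needed only for evaluation. The sketch below shows one way the reported frame-error statistics (mean ± SD, median) could be computed. Because the framework may detect several ED (or ES) frames per cine while a dataset may provide a single reference frame, the nearest-detection matching rule, the use of the sample standard deviation, and the function name are assumptions rather than the authors' evaluation protocol.

```python
from statistics import mean, median, stdev


def frame_error_summary(detected, reference):
    """Summarise absolute frame errors for one phase (ED or ES).

    detected:  case ID -> list of frame indices found by the framework;
               an empty list marks a case the framework excluded.
    reference: case ID -> annotated frame index (evaluation only).
    Each reference frame is matched to its nearest detection (ASSUMED rule).
    """
    errors, excluded = [], 0
    for case, ref in reference.items():
        frames = detected.get(case, [])
        if not frames:
            excluded += 1
            continue
        errors.append(min(abs(f - ref) for f in frames))
    if not errors:
        raise ValueError("no scorable cases")
    return {
        "mae": mean(errors),
        "sd": stdev(errors) if len(errors) > 1 else 0.0,  # sample SD
        "median": median(errors),
        "n_scored": len(errors),
        "n_excluded": excluded,
    }
```

Calling it once with ED detections and once with ES detections yields figures in the same form as those reported above, with excluded cases counted separately rather than scored.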

Conclusion: A robust and generalizable framework is presented for localizing ED/ES frames without reliance on manually labeled ED/ES training data. The approach supports both contrast and non-contrast images and can operate with or without DL-based ROI detection, offering a scalable, fully automated solution for echocardiographic analysis.