Final ID: MP1292

Analysis of Discrepancies Between Radiologist and AI CCTA Readings Across Clinical Thresholds

Background

Coronary CT angiography (CCTA) informs clinical decisions through stenosis thresholds that guide treatment, testing, and insurance approval. AI-based tools may reduce interpretative variability, but their real-world consistency with radiologist assessments remains unclear. This study evaluates the magnitude and clinical impact of segment-level disagreement between radiologist- and AI-read CCTA.

Methods

In this single-center retrospective study, 48 patients underwent CCTA with both a radiologist and an AI interpretation, each recording percent stenosis across 16 coronary segments. Segments identified as non-diseased by both reads were excluded from analysis. We calculated mean absolute difference (MAD) and threshold disagreement (TD), with thresholds defined at 50% and 70% stenosis. Each TD was labeled as an upward single-crossing (USC) or upward double-crossing (UDC), defined as stenosis differing by one threshold (e.g., <50% vs. 50-69% or 50-69% vs. ≥70%) or by both thresholds (e.g., <50% vs. ≥70%), respectively, and attributed to the reader reporting the higher category. These terms describe the direction of difference, not accuracy.
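The labeling scheme described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's analysis code: the function names, the example readings, and the treatment of "non-diseased" as 0% stenosis in both reads are all assumptions made for the sketch.

```python
from statistics import mean

THRESHOLDS = (50, 70)  # percent stenosis cut-offs named in the Methods


def category(stenosis):
    """Return 0 for <50%, 1 for 50-69%, 2 for >=70%."""
    return sum(stenosis >= t for t in THRESHOLDS)


def classify(radiologist, ai):
    """Label one segment pair.

    Returns None when both reads fall in the same category (no TD),
    otherwise (kind, higher_reader, threshold), where threshold is the
    single cut-off crossed for a USC and None for a UDC.
    """
    cat_r, cat_a = category(radiologist), category(ai)
    gap = cat_r - cat_a
    if gap == 0:
        return None
    higher = "radiologist" if gap > 0 else "AI"
    if abs(gap) == 1:
        return "USC", higher, THRESHOLDS[min(cat_r, cat_a)]
    return "UDC", higher, None


def mean_absolute_difference(pairs):
    """Mean of |radiologist - AI| over (radiologist %, AI %) pairs."""
    return mean(abs(r - a) for r, a in pairs)


# Hypothetical (radiologist %, AI %) readings, not study data.
readings = [(60, 40), (75, 30), (45, 55), (30, 20), (0, 0)]

# Assumption: "non-diseased by both reads" means 0% stenosis in both.
included = [(r, a) for r, a in readings if r > 0 or a > 0]

labels = [classify(r, a) for r, a in included]
mad = mean_absolute_difference(included)
td_rate = sum(label is not None for label in labels) / len(included)
```

Keeping the category mapping separate from the crossing label makes it easy to tally USCs per threshold and per reader, as the Results do.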

Results

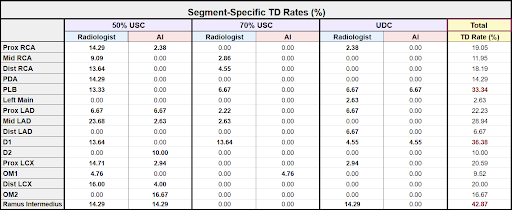

Across 395 segments, the overall MAD between the radiologist and AI readings was 20.09% (SD = 6.06%). Ramus Intermedius had the highest MAD (30.0%) and segment-specific TD rate (42.86%). Distal LAD (29.5%) and PLB (28.9%) also showed high MADs (Figure 1 and Table 1).

There were 75 TDs (18.99%), including 63 USCs (54 at the 50% threshold; 9 at the 70% threshold) and 12 UDCs. At 50%, radiologist USC occurred in 43 segments and AI USC in 11. At 70%, radiologist USC occurred in 8 segments and AI USC in 1. Radiologists also produced more UDCs than AI (10 vs. 2). Chi-square testing showed significantly more frequent USCs by radiologists at the 50% threshold (chi-square = 20.37, p < 0.0001, OR = 3.91). Fisher’s exact test showed borderline significance at the 70% threshold (p ≈ 0.05, OR = 8.00) and significantly more UDCs by radiologists than by AI (p < 0.05, OR = 5.00) (Figure 2).
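The counts in this paragraph can be cross-checked with simple arithmetic. The sketch below uses only the figures reported above; the variable names are illustrative. It also shows that the reported OR values coincide with the plain ratio of radiologist to AI counts, although whether the authors derived them that way is an assumption.

```python
total_segments = 395

# Reported upward single-crossings by threshold and reader.
usc = {
    50: {"radiologist": 43, "AI": 11},
    70: {"radiologist": 8, "AI": 1},
}
# Reported upward double-crossings by reader.
udc = {"radiologist": 10, "AI": 2}

usc_by_threshold = {t: sum(counts.values()) for t, counts in usc.items()}
usc_total = sum(usc_by_threshold.values())
udc_total = sum(udc.values())
td_total = usc_total + udc_total
td_rate_percent = round(100 * td_total / total_segments, 2)

# Ratio of radiologist to AI counts (assumed basis of the reported OR values).
count_ratios = {
    "USC at 50%": round(usc[50]["radiologist"] / usc[50]["AI"], 2),
    "USC at 70%": round(usc[70]["radiologist"] / usc[70]["AI"], 2),
    "UDC": round(udc["radiologist"] / udc["AI"], 2),
}
```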

Conclusion

Variation exists between radiologist- and AI-read CCTA, with nearly 1 in 5 segments having a TD. Posterior coronary segment readings, where anatomical limitations may challenge visualization, had the most inconsistencies. Discrepancies were most common in borderline and high-stakes ranges, where clinical decisions and insurance coverage hinge on stenosis classification. These findings support further validation of AI and caution against sole reliance on it. Comparative studies using heart catheterization as a reference are warranted to distinguish accuracy from variation.
