Final ID: 4366656

An Ensemble AI-ECG Model for Detecting a Composite of Structural Heart Disease Had Higher Test-Retest Reliability than Disease-specific Tools Across Diverse Clinical Settings

Background: The scientific literature on artificial intelligence-enabled electrocardiography (AI-ECG) has demonstrated robust performance of AI models in detecting and predicting several structural heart disorders (SHDs) from ECGs. However, for AI-ECG to have real-world clinical utility as a diagnostic test, its results must be consistent when the test is repeated under similar conditions.

Aim: To evaluate the reliability of AI-ECG models across different ECGs from the same person, across different diagnostic labels, and across varied modeling approaches.

Methods: We used ECG images (2000-2024) from 5 hospitals and an outpatient network within a large, integrated US health system. For each individual, we identified multiple ECGs recorded within a 30-day period. We evaluated 7 models: 6 convolutional neural networks (CNNs), each trained to detect an individual SHD, including LV systolic dysfunction, left-sided valve diseases, and severe LVH; and an ensemble XGBoost model that integrates the individual CNN outputs as a composite screen for multiple SHDs. We assessed model reliability using the concordance correlation coefficient (CCC), Spearman correlation, Cohen’s kappa, and percent agreement in binary screen status. We evaluated factors associated with discordant AI-ECG outputs (Δ probability > 0.5) and assessed stability across ECG layouts (digital, printed, photo).
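The paired-ECG reliability metrics named in the Methods can be illustrated with a minimal sketch. This is not the authors' pipeline: the probabilities, the 0.5 screen-positive cutoff, and the function names below are hypothetical, and Spearman correlation is omitted for brevity. The sketch only shows how Lin's CCC, Cohen's kappa, percent agreement, and the Δ probability > 0.5 instability flag could be computed for pairs of model outputs.

```python
from statistics import fmean


def concordance_cc(x, y):
    """Lin's concordance correlation coefficient for paired scores."""
    n = len(x)
    mx, my = fmean(x), fmean(y)
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return 2 * cov / (vx + vy + (mx - my) ** 2)


def cohen_kappa(a, b):
    """Cohen's kappa for two binary (0/1) label sequences."""
    n = len(a)
    observed = sum(i == j for i, j in zip(a, b)) / n
    pa, pb = sum(a) / n, sum(b) / n
    expected = pa * pb + (1 - pa) * (1 - pb)  # chance agreement
    return (observed - expected) / (1 - expected)


def percent_agreement(a, b):
    """Percentage of pairs with the same binary screen status."""
    return 100 * sum(i == j for i, j in zip(a, b)) / len(a)


# Hypothetical model probabilities for the first and second ECG of six pairs.
first = [0.10, 0.80, 0.35, 0.92, 0.05, 0.60]
second = [0.12, 0.75, 0.55, 0.90, 0.70, 0.58]

THRESHOLD = 0.5  # assumed screen-positive cutoff, not taken from the abstract
screen_first = [int(p >= THRESHOLD) for p in first]
screen_second = [int(p >= THRESHOLD) for p in second]

# A pair is flagged unstable when the two probabilities differ by more than 0.5.
unstable = [abs(a - b) > 0.5 for a, b in zip(first, second)]

ccc = concordance_cc(first, second)
kappa = cohen_kappa(screen_first, screen_second)
agreement = percent_agreement(screen_first, screen_second)
```

CCC is computed on the continuous probabilities, whereas kappa and percent agreement are computed on the thresholded screen status, so a pair can lower one metric without lowering the others.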

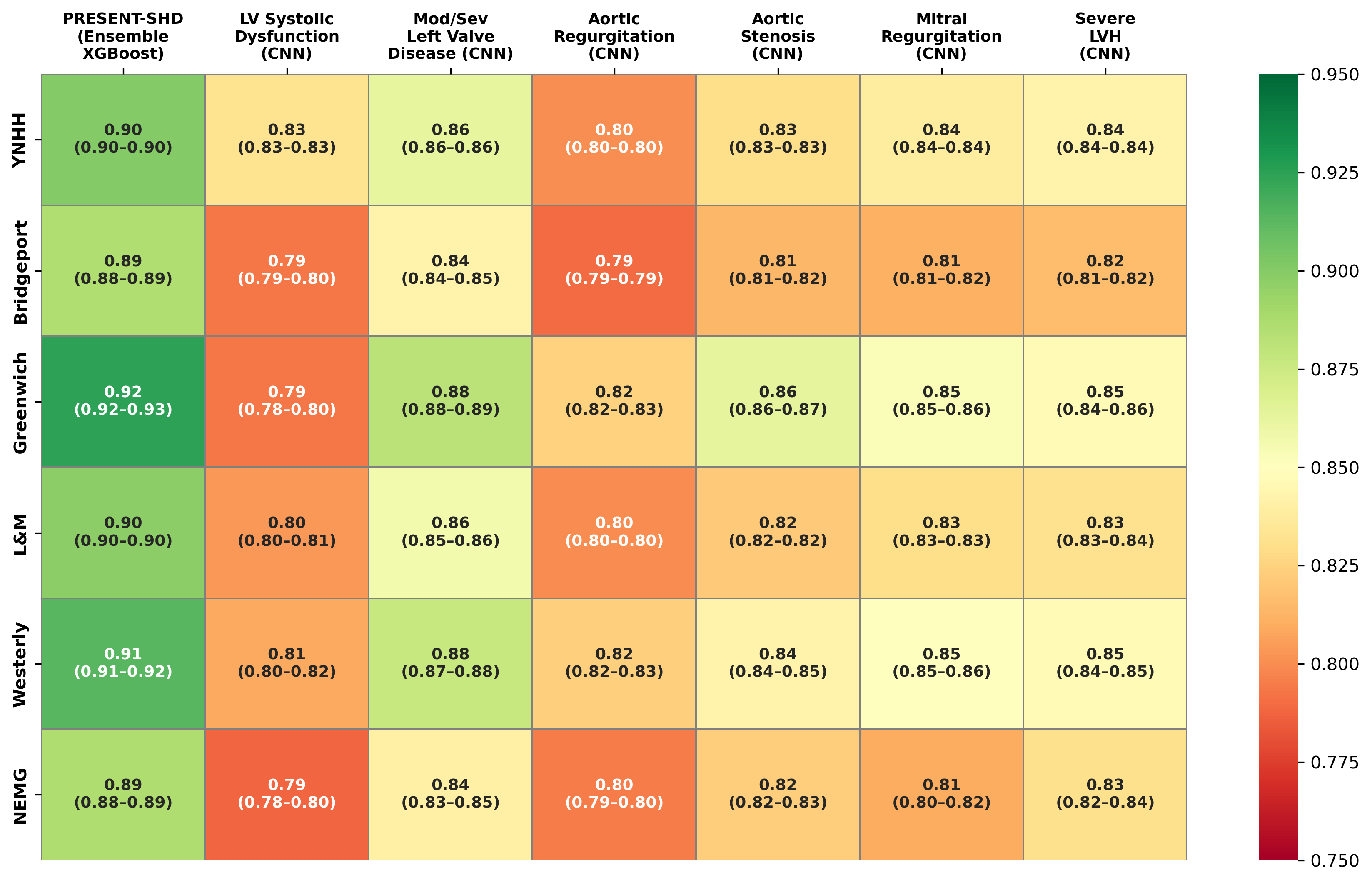

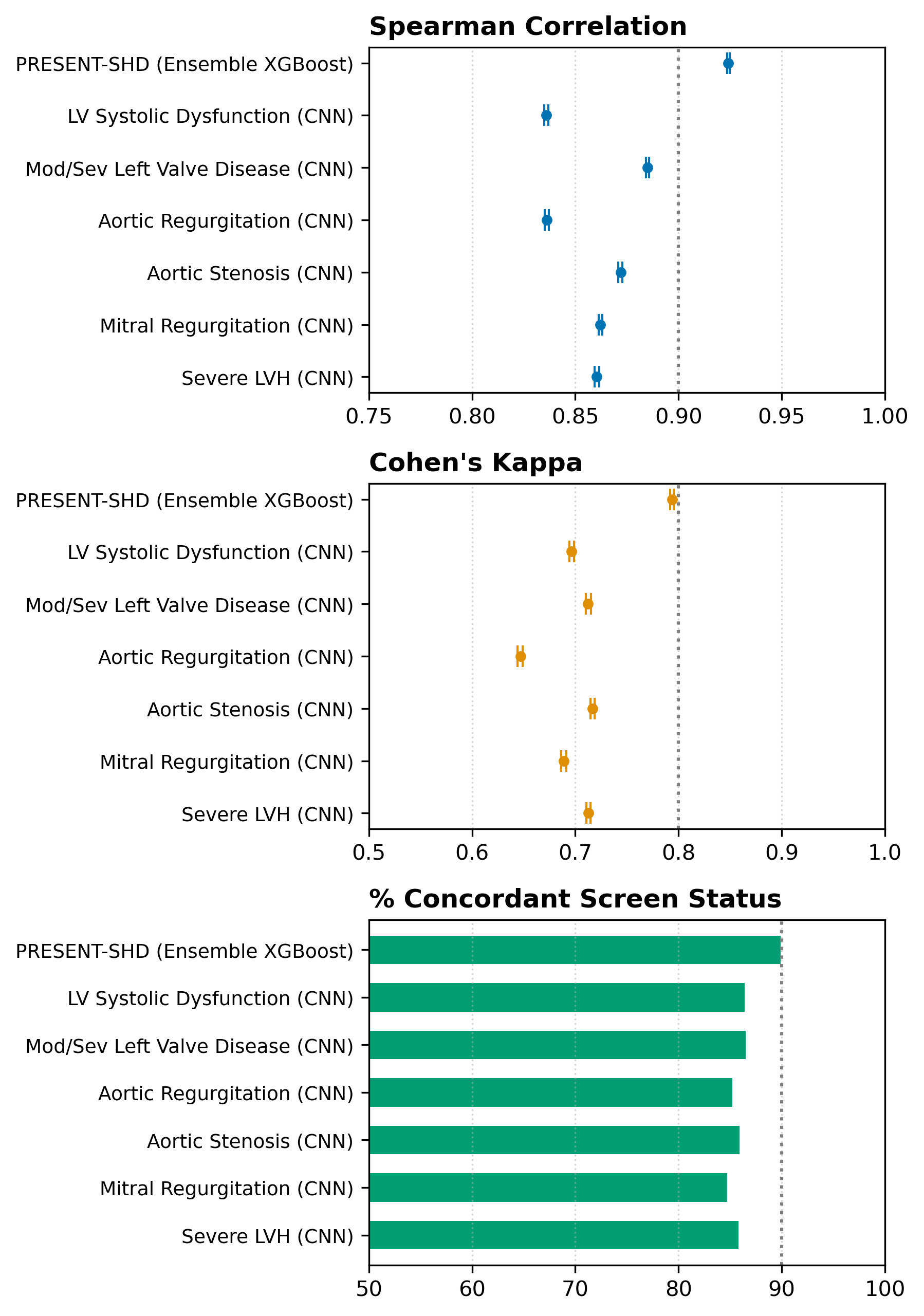

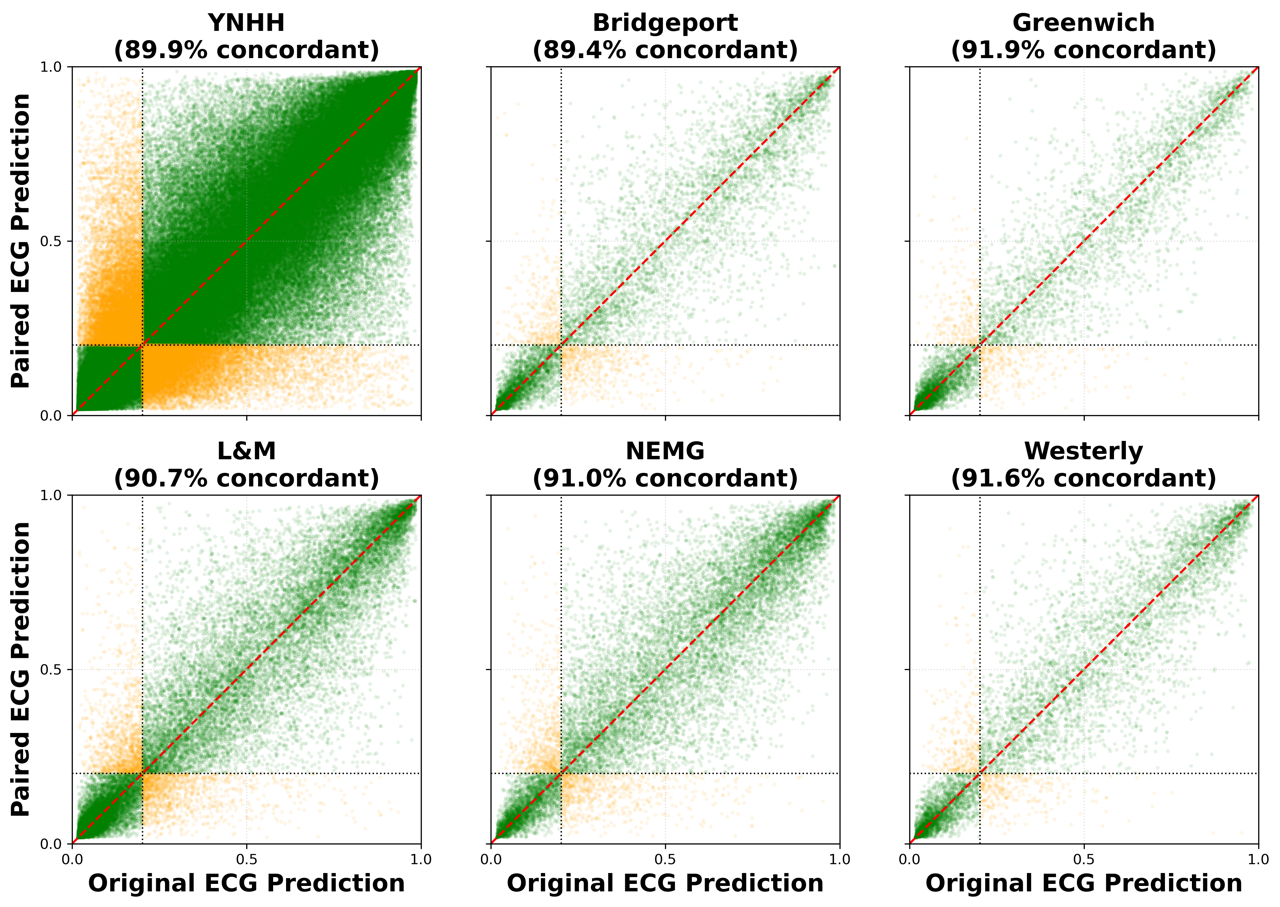

Results: Across sites, we identified 1,118,263 ECG pairs, with a median of 1 (1-3) days between ECGs. The ensemble XGBoost model had higher test-retest correlation (CCC: 0.89-0.92) and agreement (kappa: 0.75-0.82) between pairs than the CNNs (CCC: 0.78-0.88; kappa: 0.57-0.72). After adjustment for demographics, ECG pairs that included one or two inpatient ECGs were significantly more likely to yield unstable predictions (ORs: 1.60 [1.50-1.70] and 1.91 [1.78-2.05], respectively) than pairs in which both ECGs were obtained in outpatient settings. Among outpatient pairs across sites, the XGBoost model had a CCC of 0.89-0.94, a Spearman correlation of 0.90-0.94, and a kappa of 0.78-0.84, with concordance rates of 89-92%. Notably, ensemble model predictions were also stable across different ECG layouts.

Conclusion: An ensemble AI-ECG model integrating multiple CNN predictions had higher reliability than models for individual disorders. Discordance was more common in pairs that included inpatient ECGs, suggesting instability in high-acuity settings. The reliability of the ensemble AI-ECG model's outputs supports its readiness for clinical implementation in SHD screening.
