Final ID: MP1541

Comparative Evaluation of US- and China-developed Large Language Models for Bilingual Coronary Heart Disease Patient Education

Background: Coronary heart disease (CHD) remains the leading global cause of mortality and imposes substantial clinical and socioeconomic burdens. Patient education significantly improves health outcomes but is limited by resource constraints. Large language models (LLMs) offer scalable solutions for patient counselling, but their performance may vary by the language of the prompts and the region of model development. This study performs the first systematic bilingual (English and Chinese) evaluation of leading US- and China-developed LLMs for CHD education.

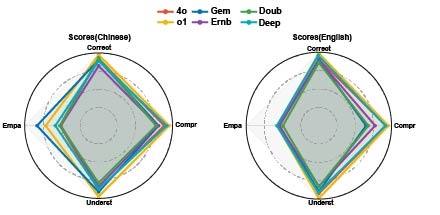

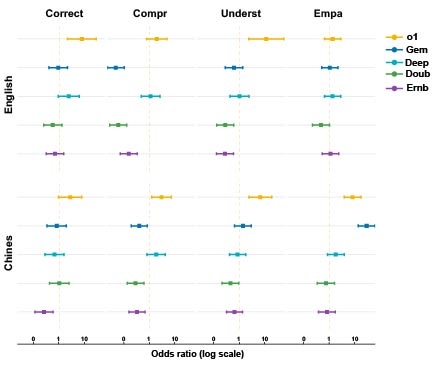

Methods: We assessed six widely used LLMs—GPT-4o, OpenAI o1, Gemini 1.5 (US-developed), and DeepSeek-R1, Doubao, ERNIE Bot 3.5 (China-developed)—using 30 bilingual CHD-related questions covering prevention, diagnosis, and management. Each response was independently rated by three cardiologists on four dimensions: correctness, comprehensiveness, understandability, and empathy. Scores were analyzed using cumulative-link mixed models (CLMM) adjusting for question and rater variability, with pairwise contrasts to identify specific differences.
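As an illustration of the modeling step described above, one common form of a cumulative-link mixed model is sketched below. The abstract does not give the exact specification, so everything here is an assumption: a logit link (suggested by the odds ratios reported in the Results), fixed effects for model, language, and their interaction, random intercepts for question and rater, and one such model fitted per rating dimension. The symbols $\theta_k$, $\beta_m$, $\gamma_l$, $\delta_{ml}$, $u_q$, and $v_r$ are illustrative names, not the authors' notation.

```latex
% Y_{mlqr}: ordinal score for model m, language l, question q, rater r
% \theta_k: threshold between score categories k and k+1
\operatorname{logit} P\left(Y_{mlqr} \le k\right)
  = \theta_k - \left(\beta_m + \gamma_l + \delta_{ml} + u_q + v_r\right),
\qquad
u_q \sim \mathcal{N}\left(0, \sigma_q^2\right),
\quad
v_r \sim \mathcal{N}\left(0, \sigma_r^2\right)
```

Under this parameterization, a larger linear predictor shifts probability toward higher scores, and the exponentiated difference between two model-by-language cells is the odds ratio for a pairwise contrast.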

Results: A total of 360 bilingual responses (4320 individual ratings) demonstrated high inter-rater reliability (Fleiss' κ=0.821). GPT-4o and OpenAI o1 achieved the highest overall scores. Correctness was the highest-rated evaluation dimension, while empathy showed the greatest variability among models. Significant language-by-model interactions indicated that, surprisingly, China-developed models (DeepSeek-R1, ERNIE Bot 3.5) performed better when responding in English rather than Chinese. Pairwise contrasts showed that OpenAI o1 significantly outperformed GPT-4o in correctness for English prompts (OR 8.07, 95% CI 2.11–30.88), with a smaller difference for Chinese prompts whose interval includes 1 (OR 2.78, 95% CI 0.94–8.25), and in understandability in both languages (English OR 12.21, 95% CI 2.37–62.91; Chinese OR 7.07, 95% CI 2.45–20.43). Gemini exhibited exceptional empathy specifically in Chinese (OR 31.69, 95% CI 14.50–69.24).
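The arithmetic behind these figures can be checked with a short sketch. It reproduces the response and rating counts from the study design and back-calculates an approximate log-odds and standard error from each reported odds ratio and 95% CI. It assumes Wald-type intervals that are symmetric on the log scale with z = 1.96; the abstract does not say how the intervals were computed or whether they were adjusted for multiple comparisons, so the standard errors are rough. The dictionary keys and the helper `summarize_or` are hypothetical names introduced for illustration.

```python
import math

# Study design as described in the abstract.
N_MODELS, N_QUESTIONS, N_LANGUAGES = 6, 30, 2
N_RATERS, N_DIMENSIONS = 3, 4

n_responses = N_MODELS * N_QUESTIONS * N_LANGUAGES   # one response per model, question, language
n_ratings = n_responses * N_RATERS * N_DIMENSIONS    # each rater scores every dimension


def summarize_or(odds_ratio, ci_low, ci_high, z=1.96):
    """Back out log-odds and an approximate SE from a reported OR and CI.

    Assumes a Wald interval symmetric on the log scale (an assumption,
    not something the abstract states).
    """
    log_or = math.log(odds_ratio)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z)
    excludes_one = ci_low > 1.0 or ci_high < 1.0
    return {"log_or": log_or, "se": se, "excludes_one": excludes_one}


# Odds ratios and 95% CIs copied from the Results text.
reported = {
    "o1_vs_gpt4o_correctness_en": (8.07, 2.11, 30.88),
    "o1_vs_gpt4o_correctness_zh": (2.78, 0.94, 8.25),
    "o1_vs_gpt4o_understandability_en": (12.21, 2.37, 62.91),
    "o1_vs_gpt4o_understandability_zh": (7.07, 2.45, 20.43),
    "gemini_empathy_zh": (31.69, 14.50, 69.24),
}
summaries = {name: summarize_or(*values) for name, values in reported.items()}

if __name__ == "__main__":
    print(f"responses={n_responses}, ratings={n_ratings}")
    for name, s in summaries.items():
        print(f"{name}: log-OR={s['log_or']:.2f}, SE~{s['se']:.2f}, "
              f"CI excludes 1: {s['excludes_one']}")
```

Of the five contrasts, only the Chinese-prompt correctness interval (0.94–8.25) contains 1, so that comparison is not significant at the conventional 5% level.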

Conclusions: Our comprehensive bilingual analysis identifies substantial performance variability associated with the model used and the prompt language. Models with chain-of-thought reasoning, such as OpenAI o1 and DeepSeek-R1, hold significant promise for clinical application. Performance declines observed with Chinese prompts in China-developed models may reflect a lack of high-quality Chinese medical corpora. Establishing robust multilingual medical databases is essential for improving cross-linguistic reliability and safety.

