Final ID: Mo2179

ChatGPT-4 Improves Readability of Institutional Heart Failure Patient Education Materials

Introduction:

Heart failure management is complex and involves lifestyle modifications such as daily weights, fluid and sodium restriction, and blood pressure monitoring, all of which place additional responsibility on patients and caregivers. Successful adherence requires comprehensive counseling and understandable patient education materials (PEMs). Prior research has shown that many PEMs related to cardiovascular disease exceed the 5th-6th grade reading level recommended by the American Medical Association (AMA). The large language model (LLM) Chat Generative Pre-trained Transformer (ChatGPT) may be a useful adjunct resource for patients with heart failure to bridge this gap.

Research Question:

Can ChatGPT-4 improve heart failure institutional PEMs to meet the AMA’s recommended 5th-6th grade reading level while maintaining accuracy and comprehensiveness?

Methods:

A total of 143 heart failure PEMs were collected from the websites of the top 10 institutions listed in the 2022-2023 US News & World Report ranking of “Best Hospitals for Cardiology, Heart & Vascular Surgery”. The PEMs from each institution were entered into ChatGPT-4 (version updated 20 July 2023), preceded by the prompt “please explain the following in simpler terms”. The readability of each institutional PEM and of the corresponding ChatGPT-4 response was assessed using the Textstat library in Python and the Textstat readability package in R. The accuracy and comprehensiveness of each response were also assessed by a board-certified cardiologist.
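As an illustration of the kind of readability scoring described above, the following is a minimal sketch of the standard Flesch-Kincaid grade level formula, 0.39 × (words/sentences) + 11.8 × (syllables/words) − 15.59. It is not the Textstat implementation used in the study: the syllable counter is a rough vowel-group heuristic, the function names are hypothetical, and the two sample sentences are invented for demonstration rather than taken from any institutional PEM.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption, not Textstat's method):
    count vowel groups and drop a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level from sentence, word, and syllable counts."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (
        0.39 * len(words) / len(sentences)
        + 11.8 * syllables / len(words)
        - 15.59
    )


# Invented sample texts, for demonstration only.
original = (
    "Patients with heart failure should monitor daily weights "
    "and adhere to sodium restriction."
)
simplified = "Weigh yourself each day. Eat less salt."

print(f"original:   {flesch_kincaid_grade(original):.1f}")
print(f"simplified: {flesch_kincaid_grade(simplified):.1f}")
```

Shorter sentences and shorter words both lower the score, which is why a simplified rewrite typically scores at a lower grade level. Very short, simple passages can score below zero on this scale.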

Results:

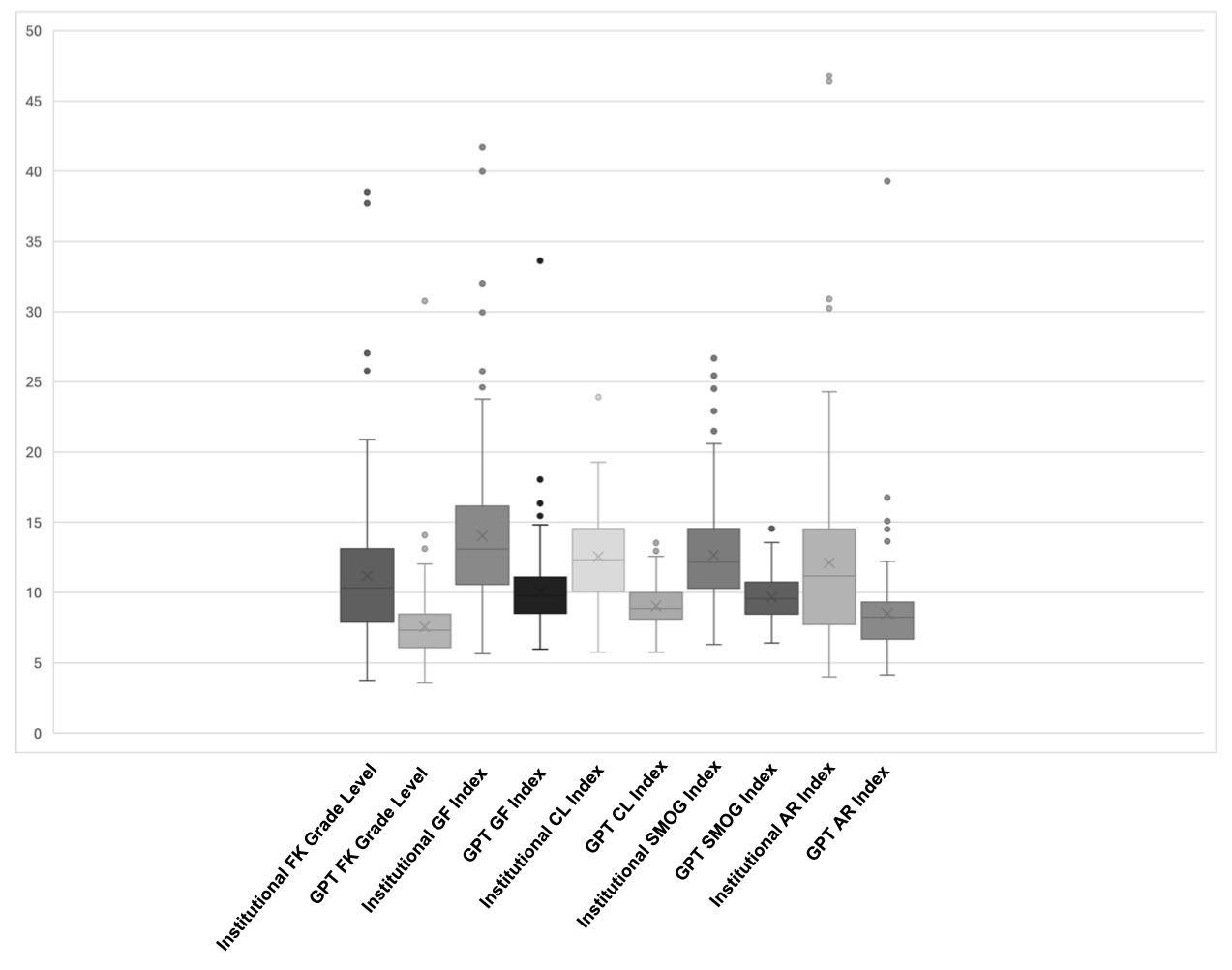

The average Flesch-Kincaid grade level was 10.3 (IQR: 7.9, 13.1) for institutional PEMs vs 7.3 (IQR: 6.1, 8.5) for ChatGPT-4 responses (p<0.001). Of the institutional PEMs, 13/143 (9.1%) met a 6th grade reading level, which increased to 33/143 (23.1%) after prompting ChatGPT-4. Every readability metric assessed differed significantly between institutional PEMs and ChatGPT-4 responses (p<0.001). While improving readability, ChatGPT-4 maintained accuracy and comprehensiveness: no responses were graded as less accurate or less comprehensive, and 33/143 (23.1%) were deemed more comprehensive than the institutional PEMs.
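The reported proportions can be checked with simple arithmetic. The sketch below uses only the counts stated above; the helper name `proportion_pct` is hypothetical, and the absolute and relative changes it derives are computed here for illustration rather than reported in the abstract.

```python
def proportion_pct(count: int, total: int) -> float:
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


N_PEMS = 143          # total PEMs collected
MET_BEFORE = 13       # institutional PEMs at a 6th grade reading level
MET_AFTER = 33        # ChatGPT-4 responses at a 6th grade reading level

before_pct = proportion_pct(MET_BEFORE, N_PEMS)
after_pct = proportion_pct(MET_AFTER, N_PEMS)

# Derived values (not stated in the abstract): absolute gain in
# percentage points and the ratio of the two counts.
absolute_gain = proportion_pct(MET_AFTER - MET_BEFORE, N_PEMS)
relative_ratio = MET_AFTER / MET_BEFORE

print(before_pct, after_pct, absolute_gain, round(relative_ratio, 2))
```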

Conclusion:

ChatGPT-4 significantly improved the readability metrics of heart failure PEMs. The model may be a promising adjunct to care provided by a licensed healthcare professional for patients with heart failure, which could improve health literacy and, potentially, outcomes.

